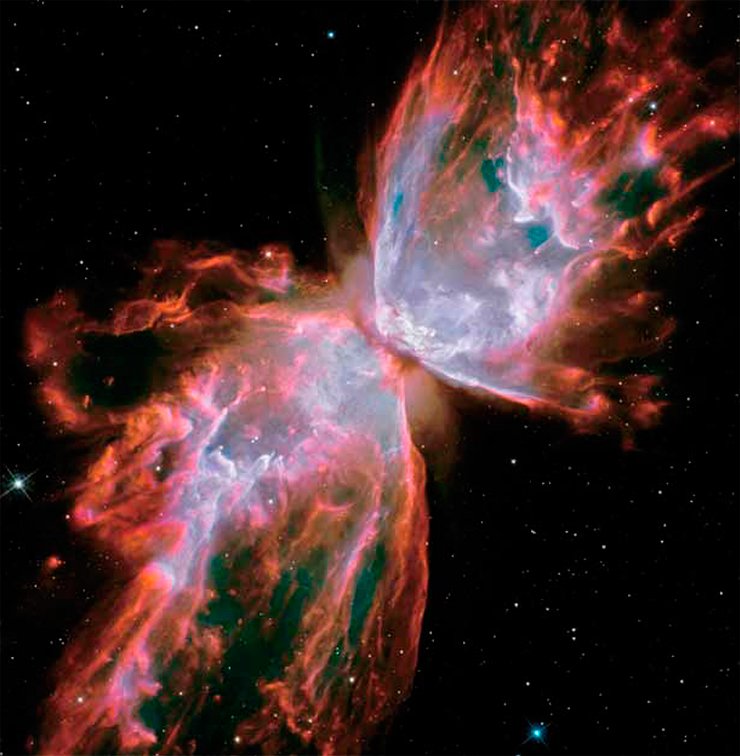

Dark Side of the Universe

The brightest minds of humanity have always struggled with the question of how the Universe works. Eventually, the joint efforts of theoreticians and experimentalists produced a consistent picture based on elementary particle physics. According to modern views, ordinary matter makes up less than 5% of the total mass and energy of the Universe; the rest is the so-called dark matter and dark energy. Since the nature of dark matter is unknown and there are several competing models, the key to searching for dark matter particles is to create a method for “finding the unknown.”

Today researchers at major science centers worldwide are designing detectors to capture the elusive dark matter particles by applying different physical principles. What Siberian physicists are looking for is the so-called cold dark matter: slow at first, its particles have since been accelerated by the galaxy’s gravitational field to about one thousandth of the speed of light. The range of possible masses of these particles is extremely wide: from three orders of magnitude heavier to twelve orders of magnitude lighter than a proton. Most physicists believe that dark matter particles must be quite massive, but the Siberian physicists followed another hypothesis, according to which these particles are only two to ten times more massive than protons. The best way to search for such light particles is to use detectors based on liquefied noble gases, such as argon or neon.

Developing a method for detecting these particles and creating the necessary instruments is the key task of the Laboratory of Cosmology and Elementary Particle Physics, which was organized in 2011 at Novosibirsk State University with the financial support of the Russian government.

In 2014, the laboratory, which is led by Prof. Alexander Dolgov (University of Ferrara, Italy), was among the winners of the fourth open competition for research grants of the Russian Government.

Dark matter is remarkable in that no one knows what it is. Astrophysics and cosmology say nothing about its nature; there are only hypotheses. The first step in searching for dark matter particles is to create a method for “finding the unknown.” Physicists with an interest in the field are looking in all possible directions to explore the widest range of possibilities.

There are several models of dark matter. What we are looking for is the so-called cold dark matter: slow at first, its particles have since been accelerated by the galaxy’s gravitational field to about one thousandth of the speed of light. The range of possible masses of these particles is extremely wide: from three orders of magnitude heavier to twelve orders of magnitude lighter than a proton. Moreover, according to an alternative model, cold dark matter could be composed of tiny, micron-sized black holes comparable in mass with a mountain range. There are also other hypotheses, e.g., that of hot dark matter: its particles traveled at near-light velocities in the early Universe and must by now have slowed down to about one hundredth of the speed of light.

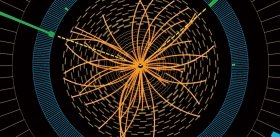

Recently, the beautiful supersymmetry-based model of dark matter, once very popular, has somewhat fallen out of favor. It predicted the existence of stable particles approximately three orders of magnitude more massive than protons, and most scientists had expected to find dark matter in exactly this form. However, dedicated experiments at the Large Hadron Collider showed that particles with these parameters most likely do not exist in nature. So far, this has been the only meaningful finding on the difficult path of searching for the “dark side” of the Universe.

Argon trap

Today there are dark matter detectors based on different physical principles. Drawing on their own understanding of the properties of this matter, scientists use different approaches to make the detectors more sensitive and selective.

Most physicists today believe that dark matter particles must be fairly massive (on the order of hundreds of proton masses); such particles are best traced using heavy recoil nuclei. The best detectors to search for them use liquefied xenon as the working fluid: several giant facilities are already operating worldwide, each containing hundreds of kilograms of this noble gas.

However, in our work we followed another hypothesis, according to which dark matter particles are only two to ten times more massive than protons. In this case, heavy recoil nuclei are not very effective, because a light particle transfers only a small fraction of its energy to a much heavier nucleus; they should be replaced by the lighter nuclei of noble gases such as argon or, even better, neon. Currently, argon is preferred because it is less expensive and more readily available; it also makes larger detectors feasible, which increases the chances of finding dark matter particles.
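
Why lighter nuclei suit lighter particles can be seen from textbook elastic-collision kinematics. The Python sketch below is an illustration added here, not the laboratory’s analysis code: it computes the largest energy a particle of mass m_chi, moving at one thousandth of the speed of light (the speed quoted for cold dark matter), can hand to a nucleus at rest. It assumes non-relativistic head-on scattering and approximates nuclear masses by mass numbers (neon 20, argon 40, xenon 131); detector thresholds and the particles’ velocity distribution, which matter in practice, are ignored.

```python
# Illustrative sketch: largest recoil energy a slow particle can give a nucleus
# in one elastic collision (non-relativistic, head-on scattering).
PROTON_REST_ENERGY_KEV = 938_272.0  # m_p c^2, about 938.272 MeV


def max_recoil_energy_kev(m_chi: float, m_nucleus: float, beta: float = 1e-3) -> float:
    """E_max = 2 * mu^2 * v^2 / m_N, with mu = m_chi * m_N / (m_chi + m_N).

    Masses are in units of the proton mass; beta = v / c.
    The default beta = 1e-3 is the 'one thousandth of the speed of light'
    quoted in the article for cold dark matter.
    """
    mu = m_chi * m_nucleus / (m_chi + m_nucleus)  # reduced mass
    return 2.0 * mu**2 * beta**2 / m_nucleus * PROTON_REST_ENERGY_KEV


# Nuclear masses approximated by mass numbers (an assumption of this sketch).
TARGETS = {"neon": 20.0, "argon": 40.0, "xenon": 131.0}

if __name__ == "__main__":
    for m_chi in (2.0, 5.0, 10.0, 1000.0):
        energies = ", ".join(
            f"{name} {max_recoil_energy_kev(m_chi, m_n):.3g} keV"
            for name, m_n in TARGETS.items()
        )
        print(f"m_chi = {m_chi:g} m_p: {energies}")
```

For particles of a few proton masses, neon and argon receive noticeably larger kicks than xenon, while for particles about a thousand times heavier than a proton, xenon comes out ahead: the same trade-off the text describes.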

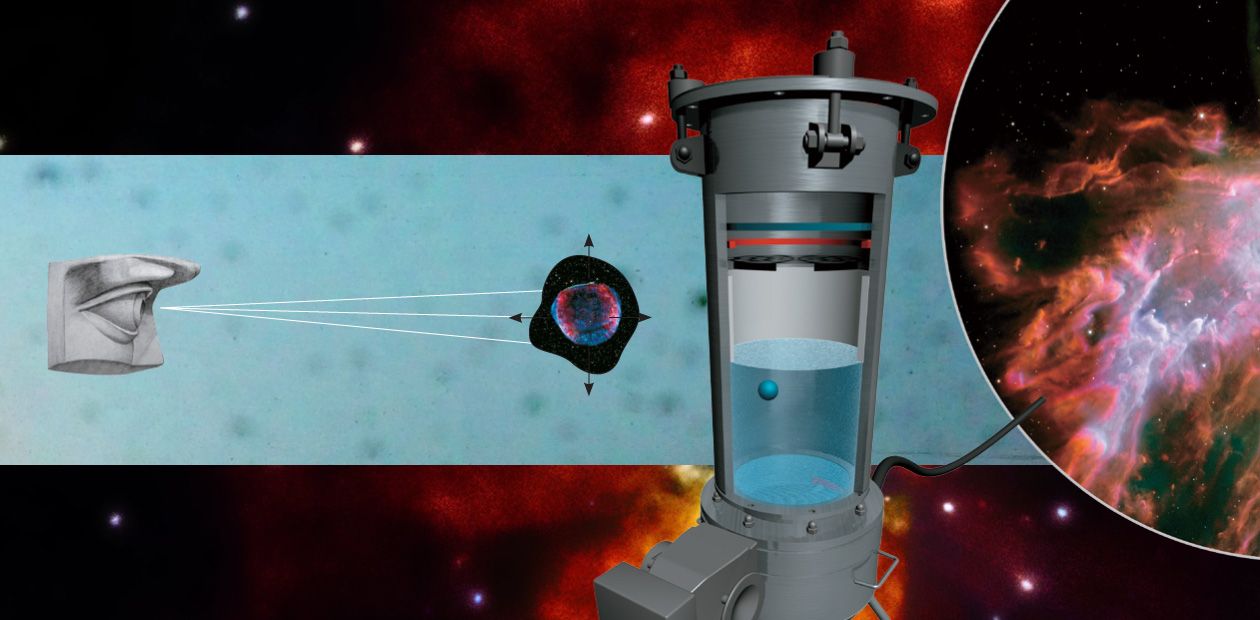

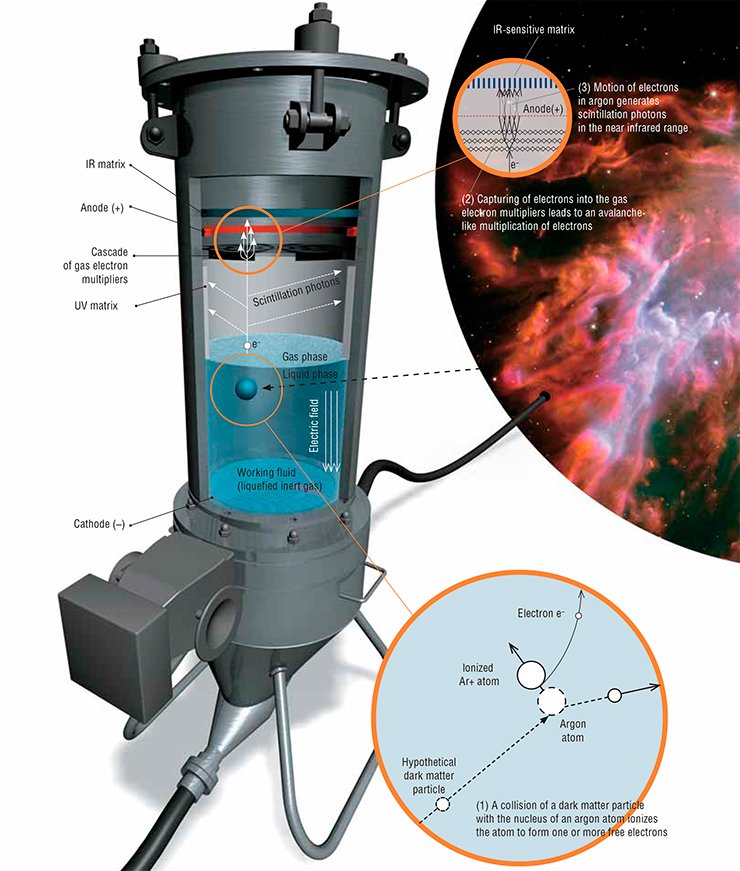

In elementary particle detectors based on noble gases, which are kept in a cryochamber in a two-phase (liquid–gas) state, dark matter particles interact with the atoms of the working substance. The ensuing recoil nuclei generate scintillation signals, which are registered by special sensors. A collection of these signals is analyzed to find the event that triggered the formation of the recoil nucleus and determine the properties of the particle responsible for the event.

The high-sensitivity two-phase cryogenic avalanche detector of dark matter, which is being developed at the NSU Laboratory of Cosmology and Elementary Particle Physics, is intended for detecting particles two to ten times heavier than protons. The researchers have already created a prototype detector holding eight liters of liquid argon and the first working facility with a 160-liter cryochamber; the instrument is being calibrated using slow monochromatic neutrons of known energy.

At first, we designed a small prototype (the size of a ten-liter bucket) to prove that a functional detector based on liquid argon could be built in principle. Then we made a “barrel” ten times larger in volume to investigate the operational features of the detector in more detail.

Model experiments use low-energy neutrons, which scatter off argon nuclei and create effects similar to those expected from the scattering of dark matter particles. In this respect, our laboratory has a unique advantage: researchers at the Institute of Nuclear Physics (Novosibirsk) have designed and built a source of monochromatic neutrons* of the required energy, which can be used to calibrate the instrument.
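
Calibration with monochromatic neutrons works because, for a neutron of known energy, elastic-scattering kinematics fixes the recoil energy at every scattering angle. The sketch below assumes non-relativistic elastic scattering, a neutron mass of one unit and a nuclear mass equal to the mass number; the 100 keV neutron energy is a hypothetical value, since the article does not state the energy of the Novosibirsk source.

```python
import math


def neutron_recoil_energy(e_neutron: float, mass_number: float, theta_cm: float) -> float:
    """Recoil energy of a nucleus (initially at rest) after elastic scattering
    of a neutron with kinetic energy e_neutron.

    theta_cm is the scattering angle in the centre-of-mass frame (radians).
    Non-relativistic kinematics; the neutron mass is taken as 1 and the
    nuclear mass as the mass number A (both approximations).
    """
    a = mass_number
    max_fraction = 4.0 * a / (1.0 + a) ** 2  # head-on collision (theta_cm = pi)
    return e_neutron * max_fraction * (1.0 - math.cos(theta_cm)) / 2.0


if __name__ == "__main__":
    E_N = 100.0  # hypothetical neutron energy in keV, for illustration only
    for degrees in (30, 60, 90, 180):
        e_r = neutron_recoil_energy(E_N, 40.0, math.radians(degrees))
        print(f"theta_cm = {degrees:3d} deg -> argon recoil {e_r:.2f} keV")
```

For argon (A = 40), at most 4A/(1 + A)², or about 9.5% of the neutron energy, goes to the nucleus in a head-on collision, so a monochromatic beam would produce recoils extending up to a sharp upper edge that can serve as a reference point for the energy scale.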

A facility containing a hundred liters of liquid argon is already a substantial engineering project, so it is not surprising that at this stage we face technical issues that need to be resolved. One issue related to the large volume is that the recoil nucleus stops in the working fluid before reaching the sensing element. This calls for a procedure that can reliably register the very small energy released as the nucleus decelerates. In other words, we are looking for optimal engineering solutions to maximize the sensitivity and reliability of the detector.

Research underground

Strictly speaking, we are now only at the beginning of our journey toward a dark matter detector of substantial volume, containing at least three hundred liters of argon. Moreover, this facility must be placed in a deep underground cave to shield it from the cosmic ray background.

It is well known that all ordinary structures built of natural materials, e.g., concrete, contain small quantities of radioactive atoms, which gradually decay to form radon. Radon is one of the most troublesome sources of interference in dark matter detection: this gas penetrates into the tiniest voids of the detector facility and, as it decays, creates a radioactive background that is very hard to eliminate. Therefore, not every underground cave is suitable for a dark matter detector.

The detector should also be made of low-radioactivity materials. Fortunately, technologies for producing such materials exist; nevertheless, it will take time to resolve this and similar technical issues. Note also that argon, which is currently obtained from atmospheric air, contains a radioactive isotope, argon-39, with a lifetime of around 300 years; its content in the atmosphere is constantly renewed by cosmic particles. However, argon can be extracted not only from air but also from natural gas that has been buried for millions of years and has therefore not been exposed to cosmic radiation; the radioactive isotope content of this argon is lower by several orders of magnitude.
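
The advantage of buried argon follows from the exponential decay law N/N₀ = exp(−t/τ). The sketch below treats the quoted “lifetime of around 300 years” as the mean lifetime τ (an assumption; the text does not say whether it means mean life or half-life) and assumes no replenishment at all. Pure decay over millions of years would remove the isotope essentially completely; the article’s more modest “several orders of magnitude” indicates that other effects, presumably including some production of the isotope underground, limit the real reduction.

```python
import math


def remaining_fraction(t_years: float, tau_years: float = 300.0) -> float:
    """Fraction of a radioactive isotope left after t_years with no
    replenishment, N/N0 = exp(-t / tau), tau being the mean lifetime."""
    return math.exp(-t_years / tau_years)


def orders_of_magnitude_lost(t_years: float, tau_years: float = 300.0) -> float:
    """Same suppression expressed as log10(N0 / N) = t / (tau * ln 10).

    Working with the logarithm avoids floating-point underflow: for
    t = 1e6 years, exp(-t / tau) is far below the smallest double.
    """
    return t_years / (tau_years * math.log(10.0))


if __name__ == "__main__":
    for t in (300.0, 3_000.0, 1_000_000.0):
        print(f"after {t:>11,.0f} years: {orders_of_magnitude_lost(t):,.1f} orders of magnitude")
```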

Terrestrial applications

A liquid argon detector of dark matter particles may also find a broad range of applications in other fields. For instance, it can be used to study coherent neutrino scattering, a process that no one has yet been able to observe experimentally. Such measurements would be of interest not only for basic research but also for practical purposes, since the technique could be used to design a remote diagnostics system for nuclear reactors.

IN SEARCH OF ANTIMATTER

We know that, within distances of at least 30 million light years, the Universe around us is dominated by ordinary matter, which is composed of electrons, protons, and neutrons. However, we also know that there are antiparticles, i.e., positrons, antiprotons, and antineutrons, whose electric charge and magnetic moment have the opposite sign. There is compelling evidence for the existence of these elementary particles, but their proportion is very small, three orders of magnitude smaller than that of ordinary matter. Half a century ago, Academician A. D. Sakharov formulated a very beautiful theory to explain the imbalance between matter and antimatter in the Universe.

Theoretically, every known atomic nucleus could have a corresponding antinucleus. Light antinuclei, such as antideuterium and antihelium, can in principle form in collisions of energetic cosmic rays. More complex antinuclei might also form in these reactions, but the heavier the nucleus, the less likely it is to be produced: even for antihelium, which consists of only four antinucleons, the probability of formation is negligibly small.

Finding natural antihelium would be a very strong argument for the existence of antiworlds, i.e., worlds similar to ours yet composed of antimatter (antinuclei). Thus, scientists are vigorously looking for traces of antimatter: there are now three major projects using different instruments to search for antihelium. Japanese researchers are looking for antiparticles in cosmic radiation by sending science payloads on balloons to high altitudes in the upper atmosphere. A joint Russian–Italian project uses a dedicated detector onboard an Earth-orbiting satellite. US scientists have designed and launched into space an antimatter spectrometer using a decommissioned three-ton magnet from CERN. Two more sensitive experiments are currently in development, but it is still unclear when they will start.

Researchers at the NSU Laboratory of Cosmology and Elementary Particle Physics consider models suggesting that there is a substantial amount of antimatter in the Universe and, moreover, not tens of millions of light years away but much closer, even in our Galaxy. They have derived interesting observable consequences that might help to discover cosmological antimatter and, in particular, antistars.

Nuclear power plants are meant to generate electricity. However, they can also serve as a source of plutonium for nuclear weapons. Preventing the export of weapons-grade plutonium under the guise of nuclear waste is far from easy. A detector placed near the reactor, however, can continuously monitor the antineutrino flux and reveal what, apart from electricity, is being produced in the reactor. No such equipment exists yet, but our laboratory has developed a technique for creating a compact detector that can autonomously, without a human operator, record when the reactor is started and when it is stopped to reload nuclear fuel.

Instruments of this kind can also be used for medical purposes, e.g., for positron emission tomography.

The expanding Universe

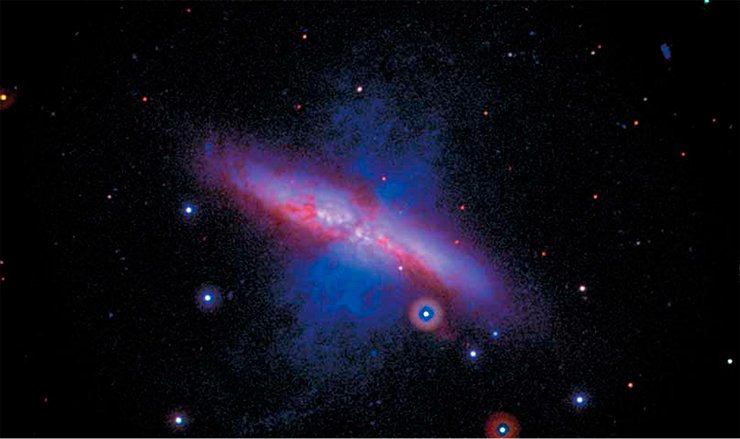

As mentioned above, designing a method to search for dark matter is the key task of the Laboratory of Cosmology and Elementary Particle Physics, which was established with the support of the government mega-grant program, but it is far from the only one. As its name suggests, the laboratory deals not only with problems of the microcosm but also with macroscopic objects, such as stars. We have also developed a new method for determining cosmological distances with the help of type IIn supernovae.

It should be noted that astrophysicists know another type of supernovae well: type Ia, which result from the explosion of white dwarfs that have accreted enough mass to reach their limiting mass. Their peak luminosities vary from one explosion to another, but astronomers can estimate them from how quickly the radiation flux declines after the explosion. The stellar light flux that reaches us then provides enough information to reliably calculate the distance to the star: once the peak power of the flare is known, the farther the star, the weaker its observed flux, which falls off as the square of the distance. The redshift in the spectrum of the supernova, together with the distance to it, can then be compared with theoretical predictions.
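
The distance estimate described here rests on the inverse-square law: a source of luminosity L seen from distance d delivers a flux F = L/(4πd²). The minimal sketch below inverts that relation. The peak luminosity is a hypothetical round number, and the sketch ignores cosmological corrections, which matter for distant supernovae, as well as absorption along the line of sight.

```python
import math

METERS_PER_MPC = 3.0857e22  # one megaparsec in metres


def flux_at(luminosity_w: float, distance_m: float) -> float:
    """Flux (W/m^2) received from an isotropic source: F = L / (4 pi d^2)."""
    return luminosity_w / (4.0 * math.pi * distance_m**2)


def distance_from_flux(luminosity_w: float, flux_w_m2: float) -> float:
    """Invert the inverse-square law: d = sqrt(L / (4 pi F))."""
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_m2))


if __name__ == "__main__":
    L_PEAK = 1e36  # hypothetical peak luminosity in watts, illustration only
    F_OBS = flux_at(L_PEAK, 100 * METERS_PER_MPC)  # synthetic "observed" flux
    d = distance_from_flux(L_PEAK, F_OBS)
    print(f"recovered distance: {d / METERS_PER_MPC:.1f} Mpc")
```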

Generally speaking, it is quite simple to measure a stellar spectrum given a sufficient quantity of the arriving photons; therefore, astronomers can reliably determine the red- or blueshift in the spectrum of a sufficiently bright star (not necessarily a supernova).

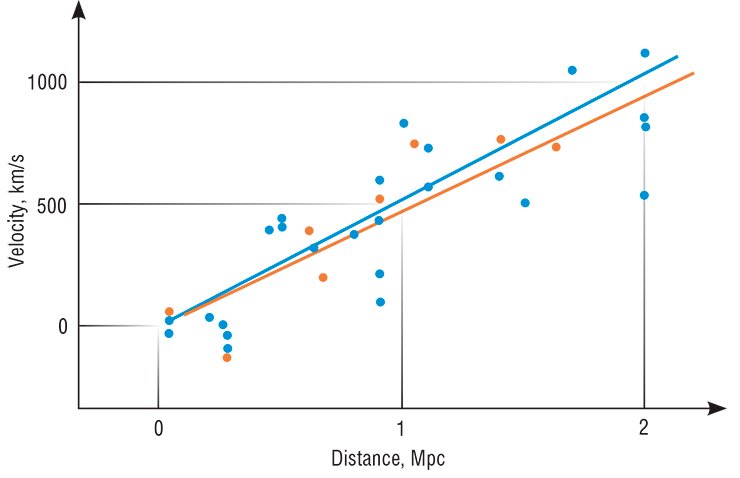

It is very difficult to discern individual stars even in the nearby galaxies since very few photons are coming from there. However, we can measure the shift of spectral lines in the spectra of whole galaxies! Their light is composed of the photon fluxes from the billions of stars in the galaxies.

As early as the beginning of the twentieth century, scientists learned how to measure line shifts in the spectra of galaxies, and they discovered that the spectra of all galaxies farther away than our close neighbor M31 in the constellation Andromeda show only a redshift: there is no blueshift at all! Furthermore, the more distant a galaxy, the greater its redshift. The ratio between a galaxy’s redshift and its distance differs only slightly from galaxy to galaxy, and the average ratio multiplied by the speed of light (the so-called Hubble parameter) gives the relative rate of expansion of the Universe.
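
In modern notation, the rule in this paragraph reads H ≈ c·z/d, valid for redshifts z much smaller than one, where the recession velocity is close to c·z. The sketch below averages z/d over a set of galaxies and multiplies by the speed of light, as the text describes. The galaxy data are synthetic, generated from an assumed H = 70 km/s/Mpc purely to check the arithmetic; they are not observations.

```python
from statistics import mean

C_KM_S = 299_792.458  # speed of light, km/s


def hubble_parameter(redshifts, distances_mpc):
    """Hubble parameter (km/s/Mpc) as c times the average z/d ratio.

    Valid only for small redshifts (z << 1), where the recession velocity
    is approximately c * z.
    """
    if len(redshifts) != len(distances_mpc) or not redshifts:
        raise ValueError("need equally long, non-empty sequences")
    return C_KM_S * mean(z / d for z, d in zip(redshifts, distances_mpc))


if __name__ == "__main__":
    # Synthetic galaxies placed on an assumed H = 70 km/s/Mpc line,
    # not real measurements.
    ASSUMED_H = 70.0
    distances = [20.0, 50.0, 100.0, 200.0]  # Mpc
    redshifts = [ASSUMED_H * d / C_KM_S for d in distances]
    print(f"H = {hubble_parameter(redshifts, distances):.1f} km/s/Mpc")
```

With real, noisy data, a least-squares fit of velocity against distance is a common alternative to a plain average, and the two estimates need not coincide.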

In 1930, at a meeting of the Royal Astronomical Society, the recognized authorities in cosmology Eddington and de Sitter acknowledged that de Sitter’s popular model of the Universe could not explain the linear trend discovered by Hubble. Soon afterward, Lemaître drew Eddington’s attention to his 1927 paper, in which the Belgian astronomer had proposed a model of an expanding universe, and Eddington perceived the idea as a revelation. De Sitter followed, describing the idea of an expanding universe as an eye-opener.

Einstein opposed the new theory longer than the other scientists, but eventually he, too, reversed his position. This was partly due to the publication of Hubble’s findings and partly to the fact that in the same year Eddington proved that the static solution proposed by Einstein himself was unstable even for a positive cosmological constant.

At the beginning of 1931, Einstein went to the Mount Wilson Observatory (California) to meet Hubble in person and discuss his results. On returning to Berlin, Einstein wrote a paper in which he accepted the theory of an expanding universe, emphasizing the pioneering role of Friedmann, and suggested that his longtime “foe,” the cosmological constant, should be excluded from general relativity.

Recall that this was more than half a century before the discovery of the accelerating expansion of the Universe. Not surprisingly, Einstein believed that the model of an expanding universe, the solution of Friedmann’s equations with a zero cosmological constant, was the only correct description of the Universe.

In 1932, Einstein and de Sitter published a joint paper in which they suggested that both the curvature of the Universe and the cosmological constant should be set to zero, giving priority to a flat model. It is this model that set the framework for the theory of an expanding universe for decades to come. Almost until the end of the twentieth century, models with a nonzero cosmological constant were barely discussed in cosmology textbooks, if at all, and were mentioned only in footnotes.
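
For reference, the flat model of Einstein and de Sitter follows from the Friedmann equation in its standard textbook form, where a(t) is the scale factor, k the curvature, Λ the cosmological constant, and ρ the matter density. Setting k = 0 and Λ = 0 and taking pressureless matter, whose density falls as a⁻³, gives a universe that expands forever while decelerating:

```latex
% Friedmann equation: scale factor a(t), curvature k, cosmological constant \Lambda
\left(\frac{\dot a}{a}\right)^{2} = \frac{8\pi G}{3}\,\rho - \frac{k c^{2}}{a^{2}} + \frac{\Lambda c^{2}}{3}

% Einstein--de Sitter case: k = 0, \Lambda = 0, pressureless matter with \rho \propto a^{-3}
\dot a^{2} \propto \frac{1}{a}
\quad\Longrightarrow\quad
a(t) \propto t^{2/3},
\qquad
H(t) \equiv \frac{\dot a}{a} = \frac{2}{3t},
\qquad
\rho = \frac{3H^{2}}{8\pi G}
```

Since a ∝ t^{2/3} implies ä < 0 at all times, expansion in this model always slows down; only an additional term such as a positive Λ can reverse that.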

However, as observational technology improved, scientists discovered that the light fluxes from very distant supernovae, whose light had traveled for billions of years before reaching us, are fainter than the then-standard theory predicted. This could mean that the distances to these stars are larger than we thought. This conclusion was confirmed by analyzing a body of astronomical data. One possible explanation is that the expansion of the Universe began to accelerate at some point in time.
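
Astronomers express brightness in magnitudes, a logarithmic scale on which 5 magnitudes correspond to a factor of 100 in flux. Combined with the inverse-square law, this turns “fainter than predicted” into “farther than predicted.” The sketch below performs that conversion; the dimming values are hypothetical inputs, and the calculation ignores dust absorption and the other corrections used in real supernova analyses.

```python
def distance_ratio_from_dimming(delta_mag: float) -> float:
    """Factor by which the true distance exceeds the expected one if a source
    looks delta_mag magnitudes fainter than predicted.

    Flux ratio F_obs / F_pred = 10**(-0.4 * delta_mag) and F is proportional
    to 1/d^2, so d_true / d_pred = 10**(0.2 * delta_mag).
    """
    return 10.0 ** (0.2 * delta_mag)


if __name__ == "__main__":
    for dm in (0.1, 0.25, 0.5):  # hypothetical dimming values, in magnitudes
        print(f"{dm:.2f} mag fainter -> {distance_ratio_from_dimming(dm):.3f} times farther")
```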

REPULSION INSTEAD OF ATTRACTION

The work plan of the NSU Laboratory of Cosmology and Elementary Particle Physics includes another intriguing area of cosmological research: studies of modified gravity. The point is that the accelerating expansion of the Universe can be explained not only by the dark energy hypothesis but also by a fundamentally different one, namely, a modified law of gravity.

According to this hypothesis, the gravitational potential is inversely proportional to distance only up to distances comparable with the size of the local galaxy cluster. Physicists have already shown that this law works well at these distances. At larger distances, however, the law may take a different, “modified” form. Clearly, this hypothesis is just a theoretical speculation, because the effect is very difficult to detect reliably by observing distant galaxies.

Old pilot books warned sailors to expect horrible things when venturing into the unknown. What “horrible things” can we expect from the standpoint of classical physics? It may be that at certain distances gravity becomes repulsive instead of attractive. The equations of motion of general relativity are then transformed in such a way that, at some point, the decelerating expansion of the Universe gives way to accelerating expansion. Such acceleration is indeed supported by recent astronomical data.

Moreover, the modified law of gravity leads to highly interesting predictions that could be tested experimentally. For example, the curvature of space begins to oscillate at a high frequency, which leads to the production of elementary particles with a mass corresponding to the oscillation frequency (Arbuzova et al., 2012).

These findings led to the conclusion that the expansion of the Universe is accelerating. In 2011, the American astrophysicists S. Perlmutter, B. P. Schmidt, and A. G. Riess received the Nobel Prize in Physics for this truly outstanding discovery. In modern theories, the accelerating recession of galaxies is associated with the concept of dark energy.

Supernovae becoming closer

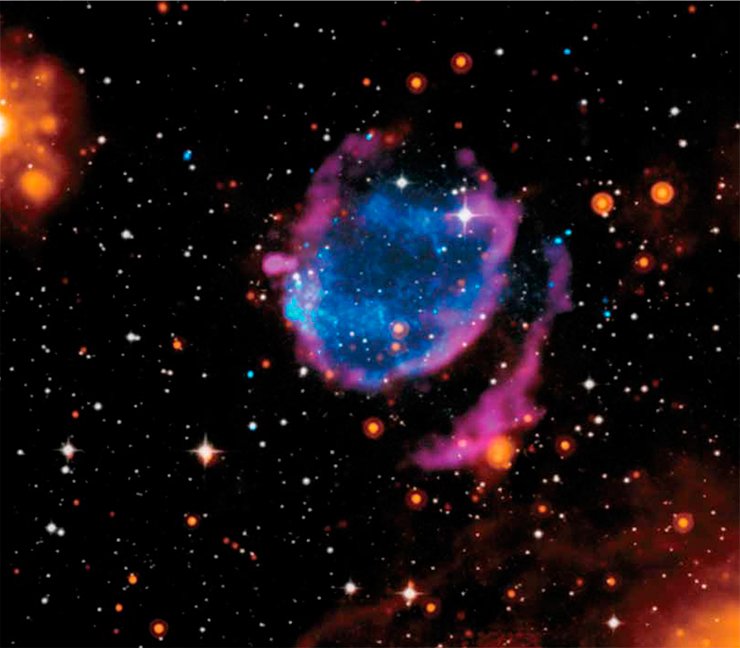

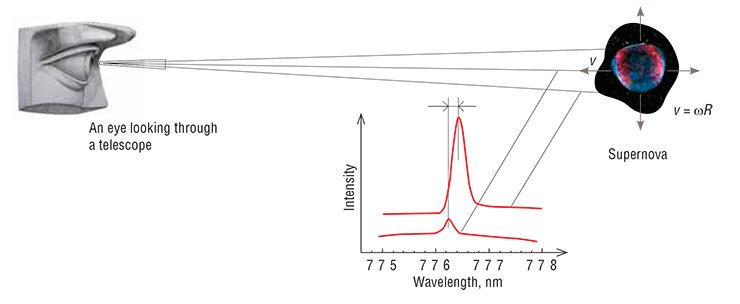

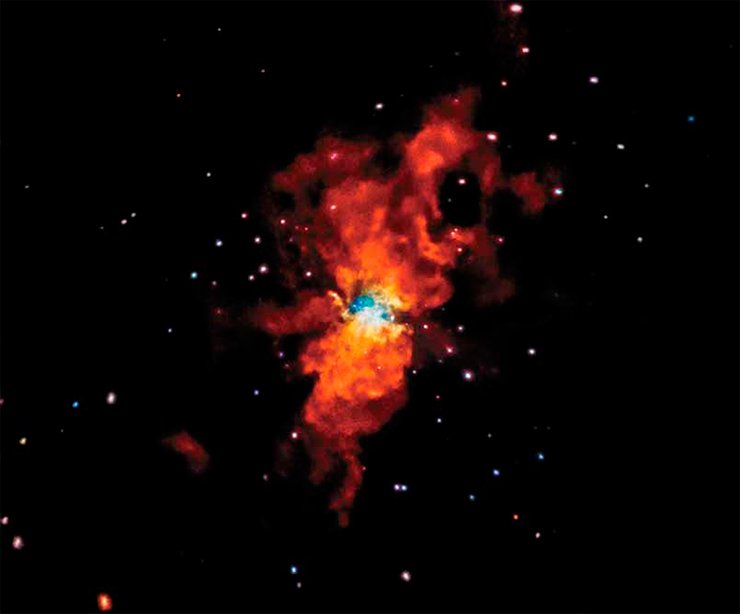

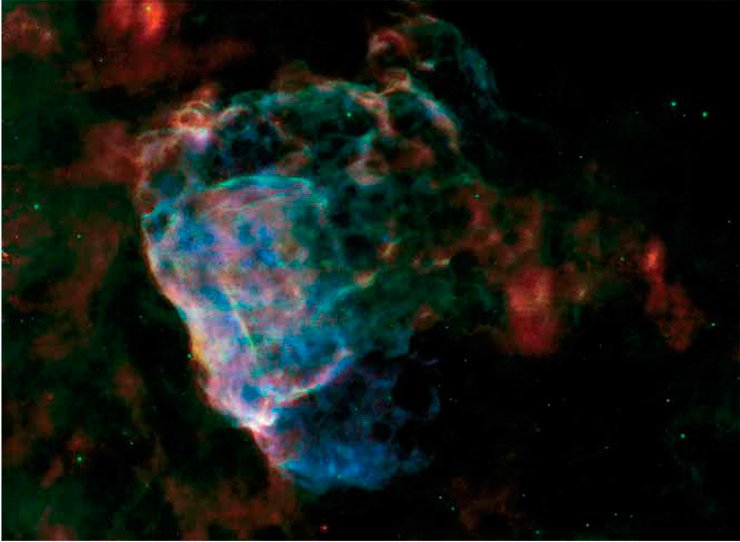

To determine the distances to remote galaxies with confidence, researchers need new methods that do not rely on the cosmic distance ladder, since this scheme is prone to poorly controlled errors. This is where type IIn supernovae come to the fore: these stars are two orders of magnitude brighter than type Ia supernovae and emit light for longer periods of time, i.e., for months or even years, while going through a series of explosions. This phenomenon was investigated in our laboratory by a group of researchers led by Prof. S. I. Blinnikov from the Institute for Theoretical and Experimental Physics (ITEP, Moscow). The researchers proposed a model for determining cosmological distances without additional assumptions. According to this model, the luminous shell of such an exploding star is spherical and expands almost isotropically with time. The spectral shift of the part of the shell moving directly toward us gives its expansion velocity, and the velocity, together with the time elapsed since the explosion, gives the radius of the shell. Knowing the radius and the angular size of the shell as observed from Earth, researchers can calculate the distance to the star (Blinnikov et al., 2012).
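
The geometric core of such a method can be sketched under simplifying assumptions made only for this illustration: the shell expands freely, so its radius is R = v·t; the line shift is small enough for the non-relativistic Doppler formula; and the distance follows from small-angle geometry, d = R/θ, with θ the angular radius. The actual procedure of Blinnikov et al. (2012), including how the angular size is obtained from the observed radiation and how cosmological effects such as time dilation are handled, is not reproduced here, and all numerical inputs are hypothetical.

```python
C_KM_S = 299_792.458        # speed of light, km/s
SECONDS_PER_DAY = 86_400.0
KM_PER_MPC = 3.0857e19      # one megaparsec in kilometres


def approach_velocity_km_s(lambda_observed: float, lambda_rest: float) -> float:
    """Speed of the side of the shell facing us from the blueshift of a
    spectral line (non-relativistic Doppler formula). Wavelengths must be in
    the supernova's rest frame, i.e. with the host galaxy's redshift removed."""
    return C_KM_S * (lambda_rest - lambda_observed) / lambda_rest


def shell_radius_km(velocity_km_s: float, days_since_explosion: float) -> float:
    """Radius of a freely expanding shell, R = v * t (an assumption)."""
    return velocity_km_s * days_since_explosion * SECONDS_PER_DAY


def distance_mpc(radius_km: float, angular_radius_rad: float) -> float:
    """Small-angle geometry: d = R / theta."""
    return radius_km / angular_radius_rad / KM_PER_MPC


if __name__ == "__main__":
    # All inputs are hypothetical, chosen only to exercise the functions.
    v = approach_velocity_km_s(lambda_observed=649.72, lambda_rest=656.28)  # nm, H-alpha
    r = shell_radius_km(v, days_since_explosion=100.0)
    theta = 1.4e-11  # hypothetical angular radius, radians
    print(f"v = {v:.0f} km/s, R = {r:.3g} km, d = {distance_mpc(r, theta):.0f} Mpc")
```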

This fundamentally new method allows researchers to substantially reduce systematic errors in determining the Hubble parameter and to refine its value. This, in turn, would allow them to refine the equation of state of dark energy and find out, in particular, whether dark energy is the same as vacuum energy (otherwise referred to as the cosmological constant) or has a different nature.
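
The equation of state of dark energy is usually written as the ratio w = p/(ρc²) of its pressure to its energy density. The standard relations below, written for a spatially flat universe containing matter and dark energy with constant w and with radiation neglected, show why this matters: a dominant component accelerates the expansion only if w < −1/3, vacuum energy corresponds to w = −1 exactly, and w controls how the Hubble parameter changes with redshift, which is how more accurate Hubble parameter measurements can constrain it.

```latex
% Acceleration equation; dark energy equation of state p = w \rho c^2
\frac{\ddot a}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^{2}}\right),
\qquad p = w\,\rho c^{2}
\quad\Longrightarrow\quad
\ddot a > 0 \ \text{if the dominant component has}\ w < -\tfrac{1}{3}

% Vacuum energy (cosmological constant): w = -1, constant density
% Flat universe, matter plus dark energy with constant w (radiation neglected):
H^{2}(z) = H_{0}^{2}\left[\Omega_{m}(1+z)^{3} + \Omega_{\mathrm{DE}}(1+z)^{3(1+w)}\right],
\qquad \Omega_{m} + \Omega_{\mathrm{DE}} = 1
```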

However, because of possible measurement errors, achieving an acceptable accuracy would require observations of hundreds or even thousands of such objects, and this will be our main task for the near future. Unfortunately, in the entire history of stellar observations, only slightly more than a dozen explosions of type IIn supernovae have been recorded, so we do not yet have enough data for generalizations. Nevertheless, new telescopes are being developed that conduct ongoing all-sky surveys, recording all the incoming data.** It will then be the job of astrophysicists to analyze this gigantic data array: to find and accurately classify the events, process the time-resolved emission spectra, and, eventually, derive values of the Hubble parameter from the refined distances to the objects. Thus, our observers have work to do for years to come.
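
The need for hundreds or thousands of objects is statistical: if each supernova gives a distance with an independent random error σ, the error of the average shrinks roughly as σ/√N. The sketch below inverts that rule. The 20% per-object error is a hypothetical value, and the rule does not apply to systematic errors shared by all objects, which is precisely why a method with smaller systematic errors matters.

```python
import math


def objects_needed(per_object_error: float, target_error: float) -> int:
    """Number of independent objects needed so that the averaged relative
    error, per_object_error / sqrt(N), does not exceed target_error.

    Assumes purely random, uncorrelated errors; systematic errors shared by
    all objects do not average down this way.
    """
    if per_object_error <= 0 or target_error <= 0:
        raise ValueError("errors must be positive")
    return math.ceil((per_object_error / target_error) ** 2)


if __name__ == "__main__":
    SIGMA = 0.20  # hypothetical 20% distance error for a single supernova
    for target in (0.05, 0.02, 0.01):
        print(f"target {target:.0%}: about {objects_needed(SIGMA, target)} supernovae")
```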

Experiment and theory are equally important, and researchers exchange ideas while discussing scientific results. The undergraduate and postgraduate students currently studying at our laboratory have an opportunity to get a comprehensive education in both theoretical and experimental fields. They also have a great chance of gaining deep insight into the analysis and processing of astrophysical data, which are now broadly available.

Physicists understand that it is impossible to create a universal detector of elementary particles, including dark matter particles. It cannot be ruled out that the path chosen by the Novosibirsk laboratory will not lead to success. This may happen if the particles turn out to be completely different from what we expect: much heavier or, on the contrary, much lighter. The liquid argon detector now being created, like any other instrument, can trace only a limited range of particle masses. However, the researchers in the laboratory believe that their strategy is right and are focusing on the currently relevant tasks.

The government mega-grant program has provided funding for three years, which is undoubtedly insufficient for a fundamental research project of this kind, one that will require more time and material resources. Transforming the idea into a working instrument, which means resolving all technical issues and finding all of its potential applications, may take years or even decades.

Research teams from different countries designing facilities to search for dark matter particles are undoubtedly engaged in a scientific rivalry. It is impossible to tell at present whether any of them has already devised an instrument that meets all the requirements for dark matter detectors. But this area of basic research is open to everyone. All results are regularly published, and researchers meet at conferences to exchange ideas and opinions. In science, it often happens that someone takes the first step and others take up the baton, but science moves forward either way.

*For more detail, see Science First Hand, 2012, No. 2 (32), pp. 88–95.

**For more detail about all-sky telescopes, see Science First Hand, 2014, No. 1 (37), pp. 111–112.

References

Dolgov A. D. Cosmology and elementary particles, or celestial mysteries // Phys. Part. Nucl. 2012. Vol. 43. No. 3. pp. 273–293.

Dolgov A. D. Cosmology: from Pomeranchuk to the present day // Phys. Usp. 2014. Vol. 57. pp. 199–208.

Bondar A. E., Buzulutskov A. F., Dolgov A. D., et al. Proekt dvukhfaznogo kriogennogo lavinnogo detektora dlya poiska temnoi materii i registratsii nizkoenergeticheskikh neitrino [Design of a two-phase cryogenic avalanche detector for dark matter search and low-energy neutrino detection] // Vestn. Novosib. Gos. Univ. Ser. Fiz. 2013. Vol. 8. No. 3. pp. 13–26.

Bondar A. E., Buzulutskov A. F., Dolgov A. D., et al. Issledovanie kharakteristik dvukhfaznogo kriogennogo lavinnogo detektora v argone [Study of the characteristics of a two-phase cryogenic avalanche detector in argon] // Vestn. Novosib. Gos. Univ. Ser. Fiz. 2013. Vol. 8. No. 2. pp. 36–43.

Arbuzova E. V., Dolgov A. D., and Reverberi L. Curvature oscillations in modified gravity and high energy cosmic rays // Eur. Phys. J. C. 2012. Vol. 72. Art. 2247.

Blinnikov S., Potashov M., Baklanov P., and Dolgov A. Direct determination of the Hubble parameter using type IIn supernovae // JETP Lett. 2012. Vol. 96. No. 3. pp. 153–157.

Translated by A. Kobkova